Speed up Python with CFFI

2023-05-13 (last update on 2023-05-20)

A real-life example script

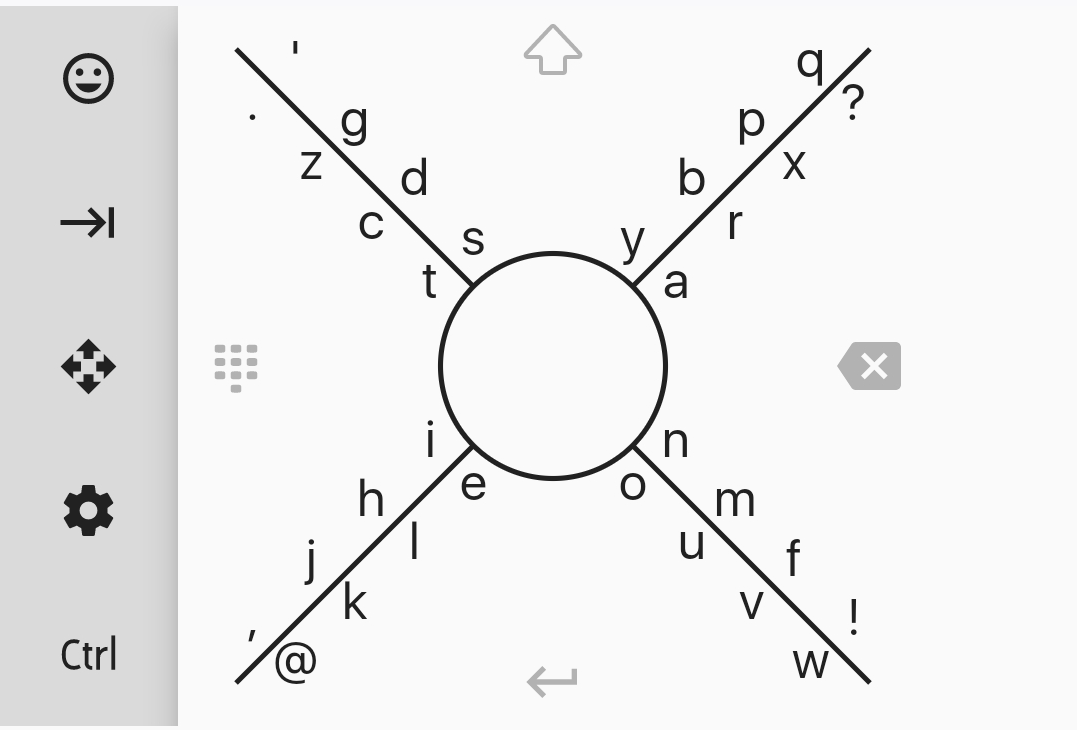

When I stumbled over the Android keyboard "8VIM" (not really related to vim), I noticed that the included keyboard layout for the German language wasn't designed for German at all. It seems to be the English layout with German umlauts added. I searched for a better German layout and found this great layout optimizer: 8vim_keyboard_layout_calculator. It's written in Python and can optimize not only for a certain language but also for combinations of multiple languages, for example 80 % German and 20 % English.

Screenshot of the 8VIM keyboard for Android

The only pain point is that it takes a lot of time calculating a score for millions of possible keyboard layouts. With the default configuration, the script runs forever (="too long to wait for it") using CPython (the "default" Python interpreter). But the author of that project recommends PyPy. In case you don't know PyPy: It's a faster Python interpreter with a built-in just-in-time compiler. The script finishes in about 13 minutes (on my laptop) using PyPy.

In this article I'm going to show how to speed up the optimizer even further by rewriting the bottlenecks in C and calling them using CFFI (C Foreign Function Interface).

Profile the current state

Let's use cProfile for obtaining a profile on function level and use the PyPy interpreter as reference.

pypy3 -m cProfile --sort=tottime main.py > cProfile_pypy_full.txt

The functions the script spends the most time in:

9818987476 function calls (9818986189 primitive calls) in 1993.894 seconds

Ordered by: internal time

ncalls tottime percall cumtime percall filename:lineno(function)

42 1535.444 36.558 1938.753 46.161 main.py:750(getLayoutScores)

800938549 171.257 0.000 171.257 0.000 {method 'as_integer_ratio' of 'float' objects}

6981740769 87.377 0.000 87.377 0.000 {built-in function ord}

283 44.404 0.157 249.999 0.883 statistics.py:123(_sum)

283 30.327 0.107 287.821 1.017 statistics.py:295(mean)

11064 29.921 0.003 33.954 0.003 {method 'extend' of 'list' objects}

283 28.593 0.101 28.593 0.101 main.py:833(<listcomp>)

22 19.125 0.869 53.077 2.413 main.py:856(combinePermutations)

1505949 16.920 0.000 17.439 0.000 main.py:727(testSingleLayout)

800938832 16.794 0.000 188.051 0.000 statistics.py:219(_exact_ratio)

800938832 7.489 0.000 7.489 0.000 main.py:830(<genexpr>)

423399000 4.033 0.000 4.033 0.000 main.py:861(<genexpr>)

4463268 0.705 0.000 0.705 0.000 {method 'join' of 'str' objects}

4805 0.454 0.000 0.950 0.000 main.py:897(performLetterSwaps)

60 0.135 0.002 0.303 0.005 main.py:621(getPermutations)

2 0.127 0.063 18.553 9.277 main.py:866(greedyOptimization)

93 0.126 0.001 0.244 0.003 main.py:493(getBigrams)

27 0.122 0.005 0.122 0.005 {built-in function compile}

65 0.050 0.001 316.470 4.869 main.py:817(getTopScores)

You can see that most time is spent in the function getLayoutScores. cumtime is the time from entering a function until returning from a function (all function calls added up). tottime excludes the time spent in subfunctions. The difference between these two numbers (about 403 seconds) seems to be mostly the time for executing getTopScores. But if you look close at the code inside getLayoutScores, you see that the inner for-loops look like a copy of the content of the function testSingleLayout. For a "fair" profiling result, I have replaced this code with a testSingleLayout function call (see the diff of this change in my fork of the project). This causes about 20 million additional function calls. This might be the reason the original code doesn't call a function here.

After this change, the code runs even a few seconds faster (minus 8 seconds, tested with PyPy, might be variance). That's the result of running the profiler again:

10242476276 function calls (10242474989 primitive calls) in 2200.136 seconds

Ordered by: internal time

ncalls tottime percall cumtime percall filename:lineno(function)

424994749 1744.985 0.000 1822.728 0.000 main.py:727(testSingleLayout)

800938549 170.613 0.000 170.613 0.000 {method 'as_integer_ratio' of 'float' objects}

6981740769 77.744 0.000 77.744 0.000 {built-in function ord}

283 45.276 0.160 249.591 0.882 statistics.py:123(_sum)

283 32.929 0.116 289.950 1.025 statistics.py:295(mean)

11064 30.421 0.003 34.578 0.003 {method 'extend' of 'list' objects}

283 27.972 0.099 27.972 0.099 main.py:827(<listcomp>)

42 20.249 0.482 2140.581 50.966 main.py:750(getLayoutScores)

22 19.739 0.897 54.315 2.469 main.py:850(combinePermutations)

800938832 16.300 0.000 186.914 0.000 statistics.py:219(_exact_ratio)

800938832 7.423 0.000 7.423 0.000 main.py:824(<genexpr>)

423399000 4.157 0.000 4.157 0.000 main.py:855(<genexpr>)

4463268 0.745 0.000 0.745 0.000 {method 'join' of 'str' objects}

4805 0.499 0.000 1.036 0.000 main.py:891(performLetterSwaps)

2 0.145 0.072 21.578 10.789 main.py:860(greedyOptimization)

60 0.131 0.002 0.304 0.005 main.py:621(getPermutations)

27 0.129 0.005 0.129 0.005 {built-in function compile}

93 0.122 0.001 0.230 0.002 main.py:493(getBigrams)

65 0.049 0.001 317.975 4.892 main.py:811(getTopScores)

Now it's obvious that the function testSingleLayout eats up most of the time and thus it might be worth replacing it with a faster version of it in C.

Migrate the bottleneck to C

Write the C code

The C code looks not much different than the Python code of this function:

cffi_extension.c:

#include "cffi_extension.h"

double test_single_layout(char* layout, int layout_length, Bigram* bigrams,

int bigrams_count, double* score_list)

{

int ascii_array[256] = {0};

for (int j = 0; j < layout_length; j++) {

ascii_array[(unsigned char)layout[j]] = j;

}

double score = 0.0;

for (int i = 0; i < bigrams_count; i++) {

Bigram bigram = bigrams[i];

int row = ascii_array[bigram.letter1AsciiCode];

int column = ascii_array[bigram.letter2AsciiCode];

score += bigram.frequency * score_list[row*32 + column];

}

return score;

}

The main differences:

ascii_arrayis defined in the function instead of passing in an empty array.SCORE_LIST(in Python) is an array of a fixed number of arrays of a fixed number of constant values. For simplicity, I transform it to a one-dimensional array in Python.- The

Bigramclass is not known to my C function. I have defined a struct with the necessary members in the header file.

cffi_extension.h:

typedef struct Bigram {

int letter1AsciiCode;

int letter2AsciiCode;

double frequency;

} Bigram;

double test_single_layout(char* layout, int layout_length, Bigram* bigrams,

int bigrams_count, double* score_list);

Call the C code in the Python script

First, we need a build script for our C extension:

cffi_extension_build.py:

from cffi import FFI

ffibuilder = FFI()

ffibuilder.cdef("""\

typedef struct Bigram {

int letter1AsciiCode;

int letter2AsciiCode;

double frequency;

} Bigram;

double test_single_layout(char* layout, int layout_length, Bigram* bigrams,

int bigrams_count, double* score_list);

""")

ffibuilder.set_source("_cffi_extension", # name of the output C extension

"""

#include "cffi_extension.h"

""",

sources=['cffi_extension.c'],

libraries=[])

if __name__ == "__main__":

ffibuilder.compile(verbose=True)

This looks quite similar to the example in the official Python CFFI documentation.

Execute it with (to compile the C code):

pypy3 ./testSingleLayout_extension_build.py

This creates these files:

_cffi_extension.c_cffi_extension.o_cffi_extension.pypy39-pp73-x86_64-linux-gnu.so(or similar)

The last one is the one we actually use in our Python script. By the way, this library works only with PyPy. If you want to use an extension in CPython, you need to build it with CPython (python ./cffi_extension_build.py).

In the Python script (main.py), the library must be imported before using it:

from cffi._cffi_extension.lib import test_single_layout

from cffi._cffi_extension import ffi

Further steps:

- Create a one-dimensional array

LINEAR_SCORE_LIST:

LINEAR_SCORE_LIST = list(itertools.chain.from_iterable(SCORE_LIST))

- Create a C

Bigramarray from the Pythonbigramslist.

bigr_count = len(bigrams)

bigr = ffi.new("Bigram[]", bigr_count)

for i, b in enumerate(bigrams):

bigr[i].letter1AsciiCode = b.letter1AsciiCode

bigr[i].letter2AsciiCode = b.letter2AsciiCode

bigr[i].frequency = b.frequency

- Create a C char array/pointer from every layout string:

char_list = permutatedLayout.encode('latin1')

The actual function call:

permutatedScore = test_single_layout(char_list, len(permutatedLayout), bigr, bigr_count, LINEAR_SCORE_LIST)

See all changes as a diff in commit f319dd6 in my fork of 8vim_keyboard_layout_calculator.

Run the optimizer again

With all the changes above applied, the optimizer now needs 9m20s to complete. That's about 28 % less than the original code.

Let's run the profiler again:

3685799907 function calls (3685798615 primitive calls) in 616.013 seconds

Ordered by: internal time

ncalls tottime percall cumtime percall filename:lineno(function)

42 245.056 5.835 575.001 13.690 main.py:760(getLayoutScores)

800938549 171.205 0.000 171.205 0.000 {method 'as_integer_ratio' of 'float' objects}

283 44.527 0.157 249.782 0.883 statistics.py:123(_sum)

283 33.062 0.117 290.387 1.026 statistics.py:295(mean)

11064 30.327 0.003 34.429 0.003 {method 'extend' of 'list' objects}

283 27.182 0.096 27.182 0.096 main.py:854(<listcomp>)

22 20.487 0.931 54.913 2.496 main.py:877(combinePermutations)

800938832 16.331 0.000 187.536 0.000 statistics.py:219(_exact_ratio)

800938832 7.536 0.000 7.536 0.000 main.py:851(<genexpr>)

425062726 6.267 0.000 6.267 0.000 {method 'encode' of 'str' objects}

426654096/426653914 6.167 0.000 6.167 0.000 {built-in function len}

423399000 4.102 0.000 4.102 0.000 main.py:882(<genexpr>)

2 1.771 0.885 2.789 1.394 main.py:887(greedyOptimization)

4463269 0.712 0.000 0.712 0.000 {method 'join' of 'str' objects}

4805 0.434 0.000 0.930 0.000 main.py:940(performLetterSwaps)

60 0.127 0.002 0.304 0.005 main.py:627(getPermutations)

93 0.121 0.001 0.226 0.002 main.py:499(getBigrams)

28 0.112 0.004 0.112 0.004 {built-in function compile}

65 0.049 0.001 317.623 4.887 main.py:838(getTopScores)

Now it's harder to see how to go on. Most time is spent in getLayoutScores. But of that time, most time (cumtime - tottime ≈ 330 seconds) is spent in subfunctions. And most of that time is spent in getTopScores. (You can guess this by looking into the code of getLayoutScores.) getTopScores also contains the calls to statistics.py (lines 3 and 4 in the profiling table). That's why we next migrate getTopScores to C.

Migrate getTopScores to C

Same procedure as for testSingleLayout. I won't copy the functions here. Just have a look at the diff of commit b0473b2.

The interesting parts of this migration

A function can't return a tuple of a tuple and an array in C (as the Python version of this function does). So we pass additional pointers as input arguments which allow the function to modify the objects the pointers point to.

We can't simply pass a list of strings (for the layouts) to the new C function. We need to create a list of char[] ffi objects and then an ffi pointer to that list:

bytes_layouts = [ffi.new("char[]", layout.encode("latin1")) for layout in layouts]

layouts_pointer = ffi.new("char*[]", bytes_layouts)

This char*[] object is compatible with the char** parameter expected by get_top_scores.

The Python version of getTopScores creates a list of indices of the layouts/scores to keep. This list is replaced with a shorter version of it via list comprehension multiple times. The C replacement indices_to_keep doesn't shrink. It simply sets additional indices to zero in every loop iteration.

In some calls to get_top_scores the layout_count is greater than 20 million. That's why we need to use the long data type. And we can't create such large arrays on the stack. So we have to use malloc to create the indices_to_keep array on the heap.

Final result

With all the changes above applied, the optimizer now needs 5m56s to complete. That's about 54 % less than the original code or more than double as fast.

Glitchy-Tozier asked me to change some things when he reviewed my pull request. One change had a great impact on performance:

I had transformed SCORE_LIST – a list of arrays – into a one-dimensional list before passing it to test_single_layout (because I didn't know how pass a nested array to a CFFI function). This made it necessary to calculate the index of the flattened list which cost some performance.

After the changes made in commit 1814d6c the optimizer needs only 4m16s. Thus, now it's three times as fast as without CFFI extension.